Visual language is the type of interaction that counts on pictorial signs beyond text to communicate info. It is common in our digital life in the type of iconography, infographics, tables, plots, and charts, encompassing the real life in street indications, comics, food labels, and so on. Because of that, having computer systems much better comprehend this kind of media can aid with clinical interaction and discovery, ease of access, and information openness.

While computer system vision designs have actually made significant development utilizing learning-based services given that the development of ImageNet, the focus has actually been on natural images, where all sorts of jobs, such as category, visual concern answering (VQA), captioning, detection and division, have actually been specified, studied and in many cases advanced to reach human efficiency. Nevertheless, visual language has actually not amassed a comparable level of attention, potentially since of the absence of massive training sets in this area. However over the last couple of years, brand-new scholastic datasets have actually been developed with the objective of assessing concern answering systems on visual language images, like PlotQA, InfographicsVQA, and ChartQA

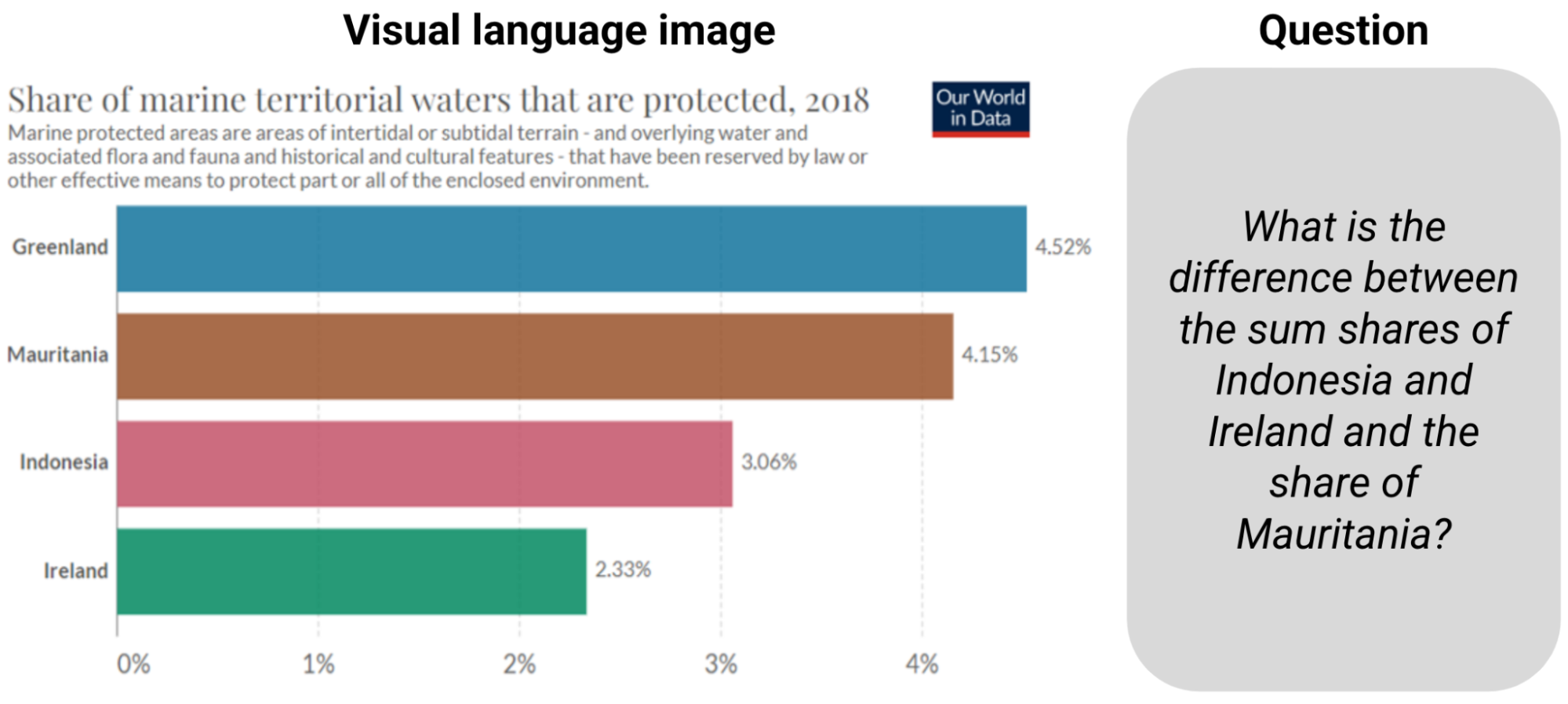

|

| Example from ChartQA Addressing the concern needs checking out the info and calculating the amount and the distinction. |

Existing designs developed for these jobs depend on incorporating optical character acknowledgment (OCR) info and their collaborates into bigger pipelines however the procedure is mistake vulnerable, sluggish, and generalizes improperly. The frequency of these techniques was since existing end-to-end computer system vision designs based upon convolutional neural networks (CNNs) or transformers pre-trained on natural images might not be quickly adjusted to visual language. However existing designs are ill-prepared for the obstacles in addressing concerns on charts, consisting of checking out the relative height of bars or the angle of pieces in pie charts, comprehending axis scales, properly mapping pictograms with their legend worths with colors, sizes and textures, and lastly carrying out mathematical operations with the drawn out numbers.

Because of these obstacles, we propose “ MatCha: Enhancing Visual Language Pretraining with Mathematics Thinking and Chart Derendering“. MatCha, which means mathematics and charts, is a pixels-to-text structure design (a pre-trained design with integrated inductive predispositions that can be fine-tuned for several applications) trained on 2 complementary jobs: (a) chart de-rendering and (b) mathematics thinking. In chart de-rendering, provided a plot or chart, the image-to-text design is needed to produce its underlying information table or the code utilized to render it. For mathematics thinking pre-training, we choose textual mathematical thinking datasets and render the input into images, which the image-to-text design requires to decipher for responses. We likewise propose “ DePlot: One-shot visual language thinking by plot-to-table translation“, a design developed on top of MatCha for one-shot thinking on charts by means of translation to tables. With these techniques we go beyond the previous cutting-edge in ChartQA by more than 20% and match the very best summarization systems that have 1000 times more specifications. Both documents will exist at ACL2023

Chart de-rendering

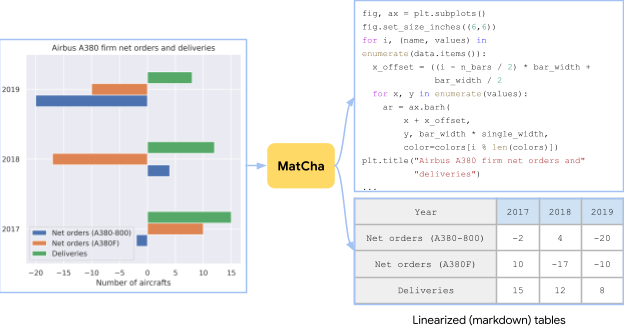

Plots and charts are normally created by an underlying information table and a piece of code. The code specifies the general design of the figure (e.g., type, instructions, color/shape plan) and the underlying information table develops the real numbers and their groupings. Both the information and code are sent out to a compiler/rendering engine to develop the last image. To comprehend a chart, one requires to find the visual patterns in the image and efficiently parse and organize them to draw out the crucial info. Reversing the plot rendering procedure needs all such abilities and can hence function as a perfect pre-training job.

|

| A chart developed from a table in the Airplane A380 Wikipedia page utilizing random outlining choices. The pre-training job for MatCha includes recuperating the source table or the source code from the image. |

In practice, it is challenging to at the same time get charts, their hidden information tables, and their rendering code. To gather adequate pre-training information, we individually collect [chart, code] and [chart, table] sets. For [chart, code], we crawl all GitHub IPython note pads with proper licenses and extract obstructs with figures. A figure and the code obstruct right prior to it are conserved as a [chart, code] set. For [chart, table] sets, we checked out 2 sources. For the very first source, artificial information, we by hand compose code to transform web-crawled Wikipedia tables from the TaPas codebase to charts. We tested from and integrated a number of outlining choices depending upon the column types. In addition, we likewise include [chart, table] sets created in PlotQA to diversify the pre-training corpus. The 2nd source is web-crawled [chart, table] sets. We straight utilize the [chart, table] sets crawled in the ChartQA training set, consisting of around 20k sets in overall from 4 sites: Statista, Bench, Our World in Information, and OECD

Mathematics thinking

We include mathematical thinking understanding into MatCha by finding out mathematics thinking abilities from textual mathematics datasets. We utilize 2 existing textual mathematics thinking datasets, MATHEMATICS and DROP for pre-training. Mathematics is artificially developed, consisting of 2 million training examples per module (type) of concerns. DROP is a reading-comprehension– design QA dataset where the input is a paragraph context and a concern.

To resolve concerns in DROP, the design requires to check out the paragraph, extract pertinent numbers and carry out mathematical calculation. We discovered both datasets to be complementary. Mathematics includes a a great deal of concerns throughout various classifications, which assists us determine mathematics operations required to clearly inject into the design. DROP’s reading-comprehension format looks like the normal QA format in which designs at the same time carry out info extraction and thinking. In practice, we render inputs of both datasets into images. The design is trained to decipher the response.

|

| To enhance the mathematics thinking abilities of MatCha we include examples from mathematics and DROP into the pre-training goal, by rendering the input text as images. |

End-to-end outcomes

We utilize a Pix2Struct design foundation, which is an image-to-text transformer customized for site understanding, and pre-train it with the 2 jobs explained above. We show the strengths of MatCha by fine-tuning it on a number of visual language jobs– jobs including charts and plots for concern answering and summarization where no access to the underlying table is possible. MatCha goes beyond previous designs’ efficiency by a big margin and likewise surpasses the previous cutting-edge, which presumes access to underlying tables.

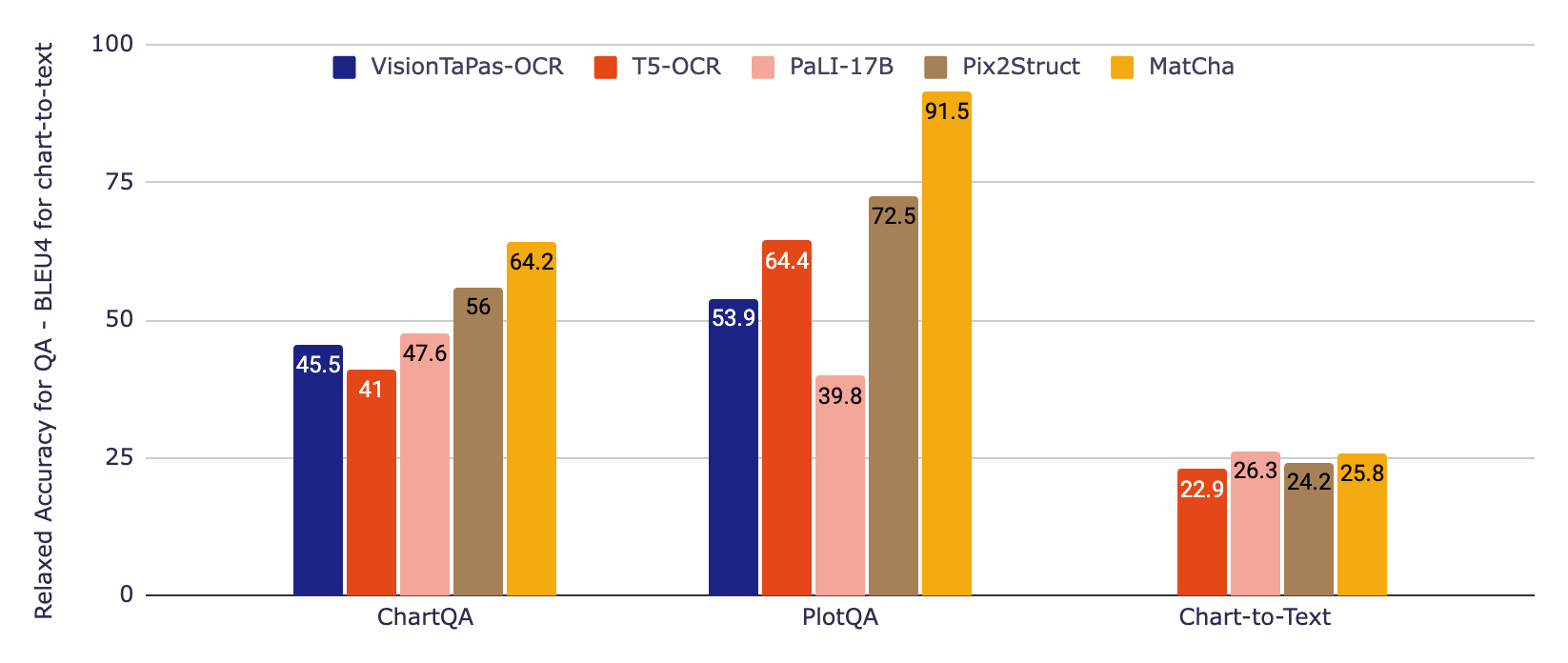

In the figure listed below, we initially assess 2 standard designs that include info from an OCR pipeline, which up until just recently was the basic technique for dealing with charts. The very first is based upon T5, the 2nd on VisionTaPas We likewise compare versus PaLI-17B, which is a big (~ 1000 times bigger than the other designs) image plus text-to-text transformer trained on a varied set of jobs however with minimal abilities for checking out text and other types of visual language. Lastly, we report the Pix2Struct and MatCha design results.

For QA datasets, we utilize the main unwinded precision metric that permits little relative mistakes in mathematical outputs. For chart-to-text summarization, we report BLEU ratings. MatCha accomplishes significantly enhanced outcomes compared to standards for concern answering, and equivalent outcomes to PaLI in summarization, where plus size and comprehensive long text/captioning generation pre-training are useful for this type of long-form text generation.

Derendering plus big language design chains

While very performant for their variety of specifications, especially on extractive jobs, we observed that fine-tuned MatCha designs might still deal with end-to-end complex thinking (e.g., mathematical operations including great deals or several actions). Therefore, we likewise propose a two-step approach to tackle this: 1) a design checks out a chart, then outputs the underlying table, 2) a big language design (LLM) reads this output and after that attempts to address the concern entirely based upon the textual input.

For the very first design, we fine-tuned MatCha entirely on the chart-to-table job, increasing the output series length to ensure it might recuperate all or the majority of the info in the chart. DePlot is the resulting design. In the 2nd phase, any LLM (such as FlanPaLM or Codex) can be utilized for the job, and we can depend on the basic techniques to increase efficiency on LLMs, for instance chain-of-thought and self-consistency We likewise try out program-of-thoughts where the design produces executable Python code to unload complicated calculations.

|

| An illustration of the DePlot+ LLM approach. This is a genuine example utilizing FlanPaLM and Codex The blue boxes are input to the LLM and the red boxes consist of the response created by the LLMs. We highlight a few of the crucial thinking actions in each response. |

As displayed in the example above, the DePlot design in mix with LLMs surpasses fine-tuned designs by a substantial margin, specifically so in the human-sourced part of ChartQA, where the concerns are more natural however need harder thinking. Additionally, DePlot+ LLM can do so without access to any training information.

We have actually launched the brand-new designs and code at our GitHub repo, where you can attempt it out yourself in colab. Checkout the documents for MatCha and DePlot for more information on the speculative outcomes. We hope that our outcomes can benefit the research study neighborhood and make the info in charts and plots more available to everybody.

Recognitions

This work was performed by Fangyu Liu, Julian Martin Eisenschlos, Francesco Piccinno, Syrine Krichene, Chenxi Pang, Kenton Lee, Mandar Joshi, Wenhu Chen and Yasemin Altun from our Language Group as part of Fangyu’s internship job. Nigel Collier from Cambridge likewise was a partner. We wish to thank Joshua Howland, Alex Polozov, Shrestha Basu Mallick, Massimo Nicosia and William Cohen for their important remarks and tips.